Introduction to Kafka Stream Processing

Apache Kafka has grown in popularity for its ability to handle high-throughput data in real time. One of its most powerful features is Kafka Streams, a library that lets developers process and transform data streams in a scalable and fault-tolerant manner. In this blog post, we take a closer look at Kafka stream processing, exploring its core concepts, architecture, and practical applications.

Table of Contents

- What is Kafka Stream Processing?

- Core Concepts

- Architecture

- Setting Up Kafka Streams

- Use Cases

- Conclusion

What is Kafka Stream Processing?

Kafka Streams is a client library for building applications and microservices whose input and output data are stored in Kafka clusters. It lets you process data in real time, reacting to changes as they happen. Because it integrates natively with Kafka, it offers a simple yet powerful model for data transformation and enrichment.

Core Concepts

Streams and Tables

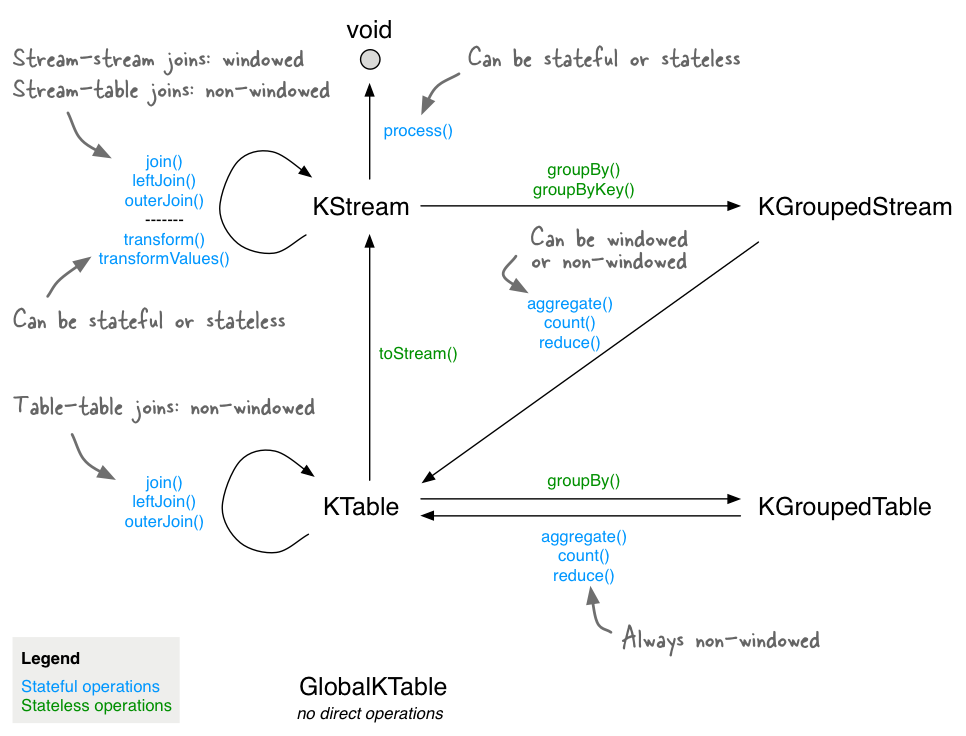

Streams and tables are the fundamental abstractions in Kafka Streams. A stream is an unbounded sequence of events, while a table represents a collection of key-value pairs that are continuously updated.

KStream and KTable

In Kafka Streams, KStream and KTable are the main primitives for stream processing:

- KStream: Represents an unbounded stream of records in which each record is an independent event, so two records with the same key are both kept.

- KTable: Represents a changelog stream, where each record is an update to the latest value for its key and replaces the previous one.

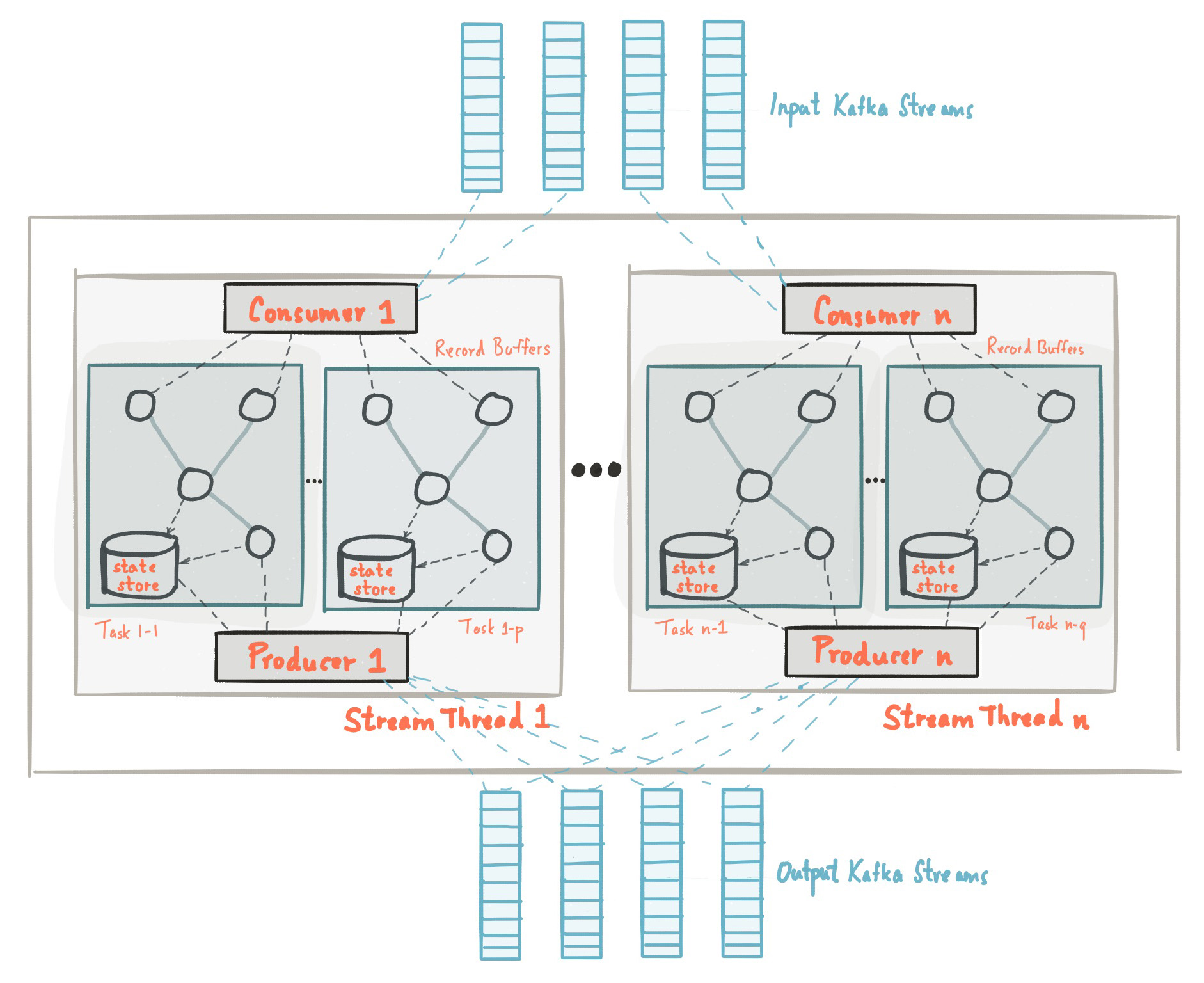
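The practical difference shows up when the same records are aggregated. The sketch below is a plain-Java model rather than Kafka Streams code: it sums the values for each key, first reading every record as an independent event (the KStream interpretation) and then reading every record as a replacement for the previous value (the KTable interpretation). The class name and the `kv`, `sumAsStream`, and `sumAsTable` helpers are hypothetical names introduced only for illustration.

```java
import java.util.AbstractMap.SimpleImmutableEntry;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of record-stream vs changelog semantics; not Kafka Streams API.
class StreamVsTableSum {

    // Hypothetical helper that builds one key-value record.
    static Map.Entry<String, Integer> kv(String key, int value) {
        return new SimpleImmutableEntry<>(key, value);
    }

    // KStream-like reading: every record is a new fact, so all values add up.
    static Map<String, Integer> sumAsStream(List<Map.Entry<String, Integer>> records) {
        Map<String, Integer> sums = new HashMap<>();
        for (Map.Entry<String, Integer> r : records) {
            sums.merge(r.getKey(), r.getValue(), Integer::sum);
        }
        return sums;
    }

    // KTable-like reading: a record replaces the previous value for its key,
    // so only the latest value per key contributes to the result.
    static Map<String, Integer> sumAsTable(List<Map.Entry<String, Integer>> records) {
        Map<String, Integer> latest = new HashMap<>();
        for (Map.Entry<String, Integer> r : records) {
            latest.put(r.getKey(), r.getValue());
        }
        return latest;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> records =
                Arrays.asList(kv("alice", 1), kv("alice", 3));

        System.out.println("as stream: " + sumAsStream(records)); // prints as stream: {alice=4}
        System.out.println("as table: " + sumAsTable(records));   // prints as table: {alice=3}
    }
}
```

The input is identical in both calls; only the interpretation changes, which is why choosing between a KStream and a KTable for a topic is a modeling decision rather than a technical detail.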

Architecture

Kafka Streams API

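The Kafka Streams API is a library that runs inside your application rather than on a separate processing cluster. It scales by splitting work along the partitions of the input topics and distributing it across application instances, and it achieves fault tolerance by backing local state with changelog topics in Kafka. This lets developers focus on business logic instead of the mechanics of distribution and recovery.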

Stateful vs Stateless Processing

- Stateless Processing: Each record is processed independently without maintaining any state.

- Stateful Processing: The application maintains some state information across multiple records, enabling complex operations like aggregations and joins.
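The two modes can be contrasted in a few lines of plain Java. This is a minimal sketch with no Kafka dependency, and every name in it (`StatelessVsStateful`, `isError`, `recordError`) is a hypothetical one chosen for illustration. In Kafka Streams, the stateless part would correspond to an operation such as `filter`, and the stateful part to an aggregation such as `groupByKey().count()`, whose counts are kept in a state store instead of an in-memory map.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java model of stateless vs stateful processing; not Kafka Streams API.
class StatelessVsStateful {

    // Stateless: the decision depends only on the current record.
    static boolean isError(String logLine) {
        return logLine.contains("ERROR");
    }

    // Stateful: a running count per key that must be remembered between records.
    private final Map<String, Long> errorsPerService = new HashMap<>();

    // Increments and returns the error count seen so far for the given service.
    long recordError(String service) {
        return errorsPerService.merge(service, 1L, Long::sum);
    }

    public static void main(String[] args) {
        StatelessVsStateful processor = new StatelessVsStateful();
        String[][] records = {
            {"billing", "ERROR timeout"},
            {"search", "INFO ok"},
            {"billing", "ERROR connection refused"}
        };
        for (String[] record : records) {
            String service = record[0];
            String line = record[1];
            if (isError(line)) {                                 // stateless step
                long total = processor.recordError(service);    // stateful step
                System.out.println(service + " errors so far: " + total);
            }
        }
    }
}
```

The stateless check could run on any instance in any order, while the counter only gives correct results if all records for a key reach the same state, which is why stateful operations depend on how records are keyed and partitioned.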

Setting Up Kafka Streams

Prerequisites

Before diving into the code, ensure you have the following:

- Apache Kafka installed and running

- Java Development Kit (JDK) 8 or higher (newer Kafka releases require a more recent JDK, so check the requirements for your version)

- Maven or Gradle for dependency management

Code Example

Here is a simple example of a Kafka Streams application in Java:

```java
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.kstream.KStream;

import java.util.Properties;

public class SimpleStreamProcessing {

    public static void main(String[] args) {
        // Basic configuration: application id, broker address, default serdes
        Properties props = new Properties();
        props.put(StreamsConfig.APPLICATION_ID_CONFIG, "stream-processing-app");
        props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        props.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        props.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        // Topology: read every record from input-topic and forward it to output-topic
        StreamsBuilder builder = new StreamsBuilder();
        KStream<String, String> sourceStream = builder.stream("input-topic");
        sourceStream.to("output-topic");

        // Start processing
        KafkaStreams streams = new KafkaStreams(builder.build(), props);
        streams.start();

        // Close the application cleanly when the JVM shuts down
        Runtime.getRuntime().addShutdownHook(new Thread(streams::close));
    }
}
```

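This example reads records from input-topic and writes them unchanged to output-topic. It is a pass-through: any processing, such as a filter or mapValues step, would be chained between the stream(...) and to(...) calls.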
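To add a processing step to the topology above, one option is to write the per-record logic as plain functions first, so it can be tested without a running broker. The sketch below does that using only the JDK. `RecordLogic`, `isValid`, `normalize`, and `process` are hypothetical names, and the rules they encode (drop blank values, trim and upper-case the rest) are made up for illustration. Assuming the `sourceStream` variable from the example, the same functions could then be plugged in with something like `sourceStream.filter((key, value) -> RecordLogic.isValid(value)).mapValues(RecordLogic::normalize).to("output-topic")`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Per-record logic kept free of Kafka types so it can be tested in isolation.
// Both rules are illustrative assumptions, not part of the original example.
class RecordLogic {

    // Keep only non-null, non-blank values.
    static boolean isValid(String value) {
        return value != null && !value.trim().isEmpty();
    }

    // Trim surrounding whitespace and upper-case the value.
    static String normalize(String value) {
        return value.trim().toUpperCase(Locale.ROOT);
    }

    // Applies the same filter-then-map steps to an in-memory list,
    // standing in for the record values read from input-topic.
    static List<String> process(List<String> values) {
        return values.stream()
                .filter(RecordLogic::isValid)
                .map(RecordLogic::normalize)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(process(Arrays.asList(" hello ", "", "kafka")));
        // prints [HELLO, KAFKA]
    }
}
```

Keeping the logic separate from the topology wiring is a design choice rather than a requirement; for small transformations, inline lambdas in the topology are just as common.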

Use Cases

Real-Time Analytics

Kafka Streams can be used to perform real-time analytics on streaming data. For instance, you can monitor user activity on a website as the events arrive, computing metrics such as page views per minute instead of waiting for a batch job.
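A common building block for this kind of analytics is a windowed count. The following plain-Java sketch models a tumbling one-minute window over page-view timestamps; it has no Kafka dependency, and the class name, the `onPageView` and `snapshot` methods, and the window size are assumptions made for illustration. In Kafka Streams, the comparable step would be a windowed aggregation along the lines of `groupByKey().windowedBy(...).count()`, with the exact window factory method depending on the library version.

```java
import java.util.Map;
import java.util.TreeMap;

// Plain-Java model of a tumbling-window count; not Kafka Streams API.
class PageViewWindows {

    static final long WINDOW_MS = 60_000L; // assumed window size: one minute

    // Window start (epoch millis) -> number of views that fell into that window.
    private final Map<Long, Long> viewsPerWindow = new TreeMap<>();

    // Assigns the event to the window containing its timestamp and bumps the count.
    void onPageView(long timestampMs) {
        long windowStart = timestampMs - Math.floorMod(timestampMs, WINDOW_MS);
        viewsPerWindow.merge(windowStart, 1L, Long::sum);
    }

    // Returns a copy of the current counts, ordered by window start.
    Map<Long, Long> snapshot() {
        return new TreeMap<>(viewsPerWindow);
    }

    public static void main(String[] args) {
        PageViewWindows windows = new PageViewWindows();
        long[] timestamps = {5_000L, 42_000L, 61_000L};
        for (long ts : timestamps) {
            windows.onPageView(ts);
        }
        System.out.println(windows.snapshot()); // prints {0=2, 60000=1}
    }
}
```

This model ignores late and out-of-order events, which a real windowed aggregation has to account for.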

Event-Driven Microservices

Kafka Streams is also well suited to building event-driven microservices. By processing events as they occur, a service can keep its own state up to date and react to changes efficiently.

Conclusion

Kafka Streams is a robust solution for real-time data processing, enabling developers to build scalable and fault-tolerant applications. By understanding its core concepts and architecture, you can use it to transform and analyze data streams effectively.

For further reading, you can check out the official Kafka documentation.